Finding a Needle in a Haystack: Understanding Search Algorithms and Optimizing Space Complexity

Introduction

In today’s digital age, data is king. From e-commerce to social media to scientific research, data is the backbone of many industries. However, with vast amounts of data comes the need for efficient search algorithms that can quickly find what we’re looking for.

In this article, we’ll explore the fascinating world of search algorithms and the crucial role that space complexity plays in determining their effectiveness.

Search Algorithms

Search algorithms are used to find specific items in a collection of data. They can be used in a variety of applications, from searching for a specific word in a document to finding the shortest path between two cities on a map. Search algorithms can be made faster or more efficient by specially constructed database structures, such as search trees, hash maps, and database indexes.

The effectiveness of a search algorithm is determined by several factors, including time complexity, space complexity, and the nature of the data being searched.

Examples of Search Algorithms

1. Linear Search:

Linear search is a simple search algorithm that checks each item in a collection until it finds the target value. This algorithm is often used for small collections or when the collection is unsorted.

2. Binary Search:

Binary search is a more efficient search algorithm that is used when the collection is sorted. This algorithm splits the collection in half at each step and only searches the half that may contain the target value.

3. A Search*:

A* search is an advanced search algorithm that is often used in pathfinding applications, such as finding the shortest path between two cities on a map. This algorithm uses a heuristic to guide the search toward the goal, evaluating the cost of each path and choosing the most promising path to explore next.

There are several other types of search algorithms, each with its own advantages and disadvantages. For example, breadth-first search is often used when we want to find the shortest path between two points, while depth-first search is useful when we want to find all possible paths between two points.

Another important consideration when selecting a search algorithm is the data structure being searched. For example, if we are searching through a binary tree, we can use a binary search algorithm to efficiently find the desired node. On the other hand, if we are searching through an unsorted list, we may need to use a linear search algorithm.

Search algorithms are an essential tool for navigating and searching through large collections of data. The selection of a suitable search algorithm depends on several factors, including the nature of the data being searched, the desired outcome, and the available resources. By understanding the different types of search algorithms and their associated time and space complexities, we can select the most appropriate algorithm for our needs and improve the efficiency of our applications.

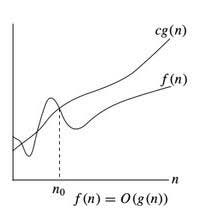

Time Complexity

Time complexity is a measure of the amount of time it takes for an algorithm to complete. The time complexity of a search algorithm is typically measured in terms of the number of comparisons or operations it performs. For example, a linear search algorithm will perform one comparison for each item in the collection being searched, giving it a time complexity of O(n), where n is the number of items in the collection. Binary search, on the other hand, has a time complexity of O(log n), where n is the number of items in the collection because it splits the collection in half at each step.

Space Complexity

Space Complexity

Most often Space complexity is misused with Auxiliary Space.

Auxiliary Space is the temporary or extra space used by an algorithm.

Space complexity is a measure of the amount of memory an algorithm requires to complete with respect to the input size. Space complexity contains both the auxiliary space and the spcar used by the input. This is an important consideration in many applications, particularly those where memory is limited. The space complexity of a search algorithm is typically measured in terms of the amount of memory required to store the data being searched, plus any additional memory required by the algorithm itself.

For example, a linear search algorithm has a space complexity of O(1), because it only needs to store the current item being searched. On the other hand, binary search has a space complexity of O(n), because it needs to store the entire collection being searched.

Space complexity is a parallel concept to time complexity. If we need to create an array of size n, this will require O(n) space. If we create a two-dimensional array of size n*n, this will require O(n2) space.

In conclusion, it is necessary to mention that space complexity depends on a variety of things such as the programming language, the compiler, or even the machine running the algorithm.